JavaScript can be a very powerful tool in a web developer’s kit for producing highly interactive, polished, responsive content. It runs in almost all browsers, across almost all devices, in almost the same way on each. It can be used for data collection (such as AJAX form submission), for streamlined data delivery (via infinite scroll or AJAX retrieval), and for complex interaction and display using canvas or other front-end technologies.

However, because it relies on the user’s system, and not the site’s system, for all processing, a web developer should keep a careful eye on the performance of their JavaScript code. This is especially true for code that should feel invisible and fluid to the user, and even more so when that fluidity is required in a mobile context. Devices with limited processing capability or memory may perform tasks significantly more slowly, temporarily taking control away from the browser and making the page stutter, or even appear locked up. If such a delay lasts long enough, many browsers will even offer the user the chance to terminate your executing script, breaking your intended site flow.

In the case study below, our client informed us that mobile users, particularly those using Chrome on an Android device, reported dissatisfaction with the site’s performance in an infinite scroll context. It would stutter, lock up, and then catch up shortly afterward, resulting in the content jumping around. It was disorienting, it was confusing, and it had serious potential to reduce traffic, both short and long term. Through research, profiling, and careful optimization, we were able to preserve the entire user experience while improving its performance on all devices, most particularly lower-powered devices such as phones and tablets.

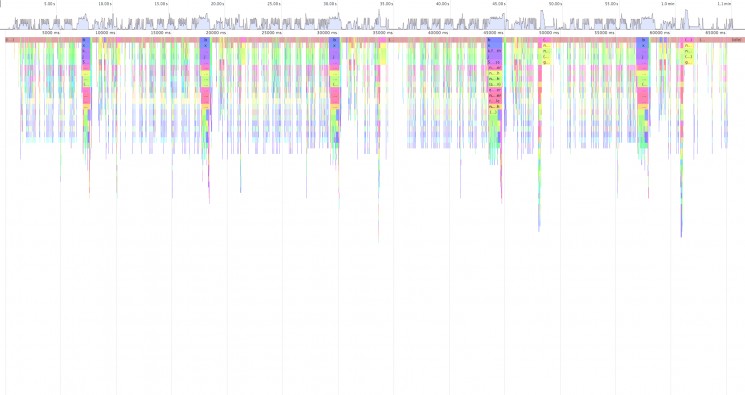

Site profile of Foodgawker before optimization

In looking at the graph, the important part to watch for user interaction is the top bar. Where it is brown, in this diagram, the browser has control and can update itself and respond to interaction. The more brown you see in this chart, the more responsive the site will appear to the user. Other colors indicate JavaScript holding control of the browser, which means that updating the screen or responding to user interaction is often halted until the script finishes executing. Long periods where the color is not brown result in stuttering, or locking up, in the user experience. The width of each bar, left to right, is the amount of time spent in that state. In this case, near the end, we were taking 2.5 seconds or more before returning control to the browser. Because this is not a user-initiated action where a delay is expected, having the browser stop responding for a couple of seconds before continuing is an extremely jarring and unpleasant experience.

The questions we had to answer were why it took so long, and what we could do to minimize both the total time spent processing our behaviors and the amount of time our behaviors took away from the browser in any one stretch (which is what produces visible locks and stutters in the browser interaction).

Ultimately, we settled on the following changes:

- Improve our jQuery selection and processing.

- Allow event handlers to run asynchronously when other code does not rely on them.

- Reduce the CSS complexity of the site and improve CSS performance.

Our process

Improving jQuery selection and processing

The first quick gain, without changing existing behaviors, is to look at the jQuery selectors used in the site. It is important to remember that jQuery selectors operate very similarly to the browser’s CSS selectors, with many of the same performance considerations. When a selector string contains multiple selectors, the right-most one should be the most specific, when possible. For example, you might change the selector “div.wrapper-class h1” to “div h1.wrapper-title” for a small performance gain. jQuery also handles ID-based selectors quickest, with class-based selectors next in performance.

The next thing to look at, in selectors, is separating the pseudo-selectors from the primary forms of selectors. In particular, “:hidden”, “:visible”, and “:focus” should be separated from others. Plain CSS selectors can be handed to the browser’s native selector implementation, and jQuery takes advantage of that. However, a compound selector such as “input:hidden” relies on a jQuery extension that is not part of CSS, so it is not guaranteed to run through the browser’s implementation to select the input, and may run entirely within jQuery. This can result in much slower performance. When writing something like $("input:hidden"), it is often a very simple performance gain to do $("input").filter(":hidden") instead.
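As a minimal sketch of that split, assuming jQuery is passed in as `$` (the helper name `findHiddenInputs` is hypothetical and only there for illustration):

```javascript
// Hypothetical helper. `$` is assumed to be the jQuery function, passed in
// so the sketch does not depend on a global.
function findHiddenInputs($) {
  // Step 1: a plain CSS selector, which jQuery can hand to the browser's
  // native selector implementation.
  var inputs = $("input");

  // Step 2: apply the jQuery-only ":hidden" test to that smaller set.
  return inputs.filter(":hidden");
}
```

On a page with jQuery loaded, this would be called as `findHiddenInputs(jQuery)`.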

It’s also important to remember that such queries take time. If you’re going to repeatedly refer to a certain selection (such as “body”), store it in a local variable and query it only once. This will prevent re-running the query multiple times, often offering an extremely large gain over time on operations the site performs multiple times.
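A small sketch of caching a selection, again assuming jQuery is passed in as `$`. The function `tagBody` and its `classNames` parameter are made up for the example:

```javascript
// Hypothetical example. `$` is assumed to be the jQuery function and
// `classNames` an array of CSS class names to add to <body>.
function tagBody($, classNames) {
  // Run the "body" query a single time and keep the result.
  var $body = $("body");

  for (var i = 0; i < classNames.length; i++) {
    // Reuse the cached selection instead of calling $("body") again.
    $body.addClass(classNames[i]);
  }
  return $body;
}
```

However many class names are passed, the query itself runs once. The same pattern matters most inside handlers that fire repeatedly, such as scroll handlers.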

Creating asynchronous event handlers

This can be a subtle trick that can really make a site more performant, even if the code itself does not change. When event handlers are processed in jQuery, the handlers are run synchronously (each one blocks until it finishes) and sequentially (one after another). Adding a handler will put it at the end of the list, but this order should not be counted on, so one handler shouldn’t depend on another unless the first handler explicitly triggers an event for the second. However, this means that, if you have three handlers that each take 500 milliseconds to run, the browser will be “locked up” for 1.5 seconds in this alone. That is an extremely noticeable time to a user, particularly when this is supposed to be a silent interaction in a fluid state such as infinite scroll.

How, then, do you do the same work, but cut it down so that the browser only locks up for 500ms or so at a time? Through asynchronous handling. Your event handler can encapsulate its functionality inside a closure and run that closure through a setTimeout() call. In doing so, you defer the work and let control return to the parent jQuery event, and eventually the browser, much faster. This allows it to get through all 3 handlers in something closer to 1ms, do a browser update, and then run the deferred work. The deferred callbacks still run one at a time on the browser’s main thread rather than in parallel, but because each one runs as its own task, the browser gets a chance to update the screen and respond to the user between them. The more often we return control to the browser, and the longer it stays there, the more fluid the interaction will be.
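One way to sketch this deferral is a small wrapper around an existing handler. The name `deferHandler` is hypothetical, and the optional `schedule` parameter exists only so the sketch can be exercised without real timers. In normal use it falls back to setTimeout:

```javascript
// Wrap a handler so its work runs in a later task instead of inside the
// event dispatch itself. `deferHandler` and `schedule` are illustrative
// names, not part of jQuery.
function deferHandler(handler, schedule) {
  schedule = schedule || setTimeout;

  return function () {
    var context = this;
    var args = arguments;

    // Return immediately. The real work is queued behind the current task,
    // so the browser can regain control before it runs.
    schedule(function () {
      handler.apply(context, args);
    }, 0);
  };
}
```

With jQuery this might be bound as `$(window).on("scroll", deferHandler(loadMoreItems))`, where `loadMoreItems` stands in for whatever work the handler does. One trade-off is that a deferred handler runs after the event has finished dispatching, so it can no longer cancel the event with preventDefault().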

Reducing and optimizing CSS performance

It is important to understand that, particularly within a mobile context, your CSS can have a direct impact on your JavaScript performance. This is not immediately intuitive unless you understand the mechanics of browsers. When certain styles change, or content is added to or removed from the document flow, the browser runs “reflow” and “redraw” behaviors. In these cases, the browser needs to recalculate the positions of everything in the DOM after the element being modified, and it does this every time that element is updated, changed, added, or removed. When it runs these actions, it needs to process all of the CSS rules again, update the DOM, and evaluate each element according to those rules.

Because of this, the larger your DOM gets and the more complex your CSS is, the longer the browser can take doing reflow and redraw actions. During these actions some browsers, most notably Chrome on Android devices, may block when JavaScript requests certain properties, including page dimensions and the scroll positions of elements. This can create a nasty chain effect: JavaScript holds control of the browser, preventing updates, while it waits for the browser to finish its last redraw or reflow so that the property values can be returned. This results in much longer wait times on even simple interactions in JavaScript, and can be a leading cause of stuttering in what should otherwise be a smooth and flowing interaction.
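One common way to limit how often JavaScript ends up waiting on layout is to read every layout property first and only then write style changes. This is a general technique rather than something specific to this site, and `equalizeHeights` below is a hypothetical helper used only to illustrate it:

```javascript
// Hypothetical helper: make every element in `elements` as tall as the
// tallest one. `elements` is assumed to be an array of DOM elements.
function equalizeHeights(elements) {
  var tallest = 0;
  var i;

  // Phase 1: read every layout value first. No styles have changed yet,
  // so the browser can answer these reads from a single layout pass.
  for (i = 0; i < elements.length; i++) {
    tallest = Math.max(tallest, elements[i].offsetHeight);
  }

  // Phase 2: write all of the style changes together. Interleaving these
  // writes with the reads above would force a fresh layout before each read.
  for (i = 0; i < elements.length; i++) {
    elements[i].style.height = tallest + "px";
  }

  return tallest;
}
```

The same idea applies to scroll handlers: read the scroll position and page dimensions once at the top of the handler, then reuse those values rather than asking the browser again after the DOM has changed.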

CSS can be simplified most easily by ensuring that rules are as lightweight as possible, and that any obsolete rules that no longer apply to the site are removed. You can optimize further in the same way as described above for jQuery, leveraging the right-to-left specificity within your CSS rules. In limited cases, it may even be valuable to split your CSS into multiple files so that you are not loading rules on pages that never use them.

It is also important to avoid JavaScript expressions in your CSS file (a legacy feature that only older versions of Internet Explorer support), as these can be incredibly slow, and will run any time the view is resized or the content is updated.

The results

Through these changes, we were able to return control to the browser much more often, and much more quickly, than we had prior to these updates. There are still occasional issues in reflow and redraw as the DOM gets heavier, in particular in ad code. Further optimization and changes could improve this as well, though there are sometimes constraints outside our control (such as the optimization of third-party ad scripts). With client cooperation, more improvement could potentially come from removing features and simplifying the overall process. However, these updates already offer substantial improvement with no feature loss, resulting in a happier client and happier users on the client’s site.